Attitude Control of Reaction Wheel system

Inspiration

Have you wondered how satellites reorient themselves in space? They do it without any external force and without changing their total angular momentum. Now imagine a cubical box suspended by a string. Could such a box rotate on your command without the aid of a propeller, a magnet or any other external force? If you said 'no', then take a look at this video.

Working

What's happening here is that we are hacking the conservation of angular momentum to create an apparent rotation. Inside the box is a rotating body whose angular momentum can be changed by varying the input to its driving motor. When it speeds up its rotation in one direction, the whole cube rotates in the other direction, hence the name "reaction wheel".

For this setup, we used an HMC5883L magnetometer and an MPU6050 IMU to get the orientation of the box. We implemented a PID controller on a NodeMCU board. The output of the controller is used to drive the motor through an L293D motor driver. After manual tuning, the cube could orient itself in any desired direction and return to it even when disturbed.

To step it up further, the desired angle was sent from a smartphone over Wi-Fi. The angle sent was the phone's orientation with respect to geomagnetic north, so when the phone is rotated, the cube appears to mirror the phone's yaw motion.

This was my bachelor's thesis project.

Team members: B Suresh, Dennis Joshy, Nithish Gnani, Hari Shankar
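The control loop described under Working can be sketched as follows. This is a minimal Python sketch, not the NodeMCU firmware used in the project: the class name `YawPID`, the gains, and the output limit of 255 (a typical 8-bit PWM range) are assumptions for illustration. The one detail worth showing is that the heading error is wrapped to [-180, 180), so the cube turns the short way round when the target crosses north.

```python
def wrap_angle(deg):
    """Wrap an angle in degrees to the interval [-180, 180)."""
    return (deg + 180.0) % 360.0 - 180.0


class YawPID:
    """Hypothetical PID controller for the cube's yaw (names are illustrative)."""

    def __init__(self, kp, ki, kd, out_limit=255.0):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.out_limit = out_limit   # assumed PWM range of the motor driver
        self.integral = 0.0
        self.prev_error = None

    def update(self, target_deg, heading_deg, dt):
        """Return a clamped motor command from the target and measured heading."""
        # Wrapped error: +20 for target 10 and heading 350, not -340.
        error = wrap_angle(target_deg - heading_deg)
        self.integral += error * dt
        if self.prev_error is None:
            derivative = 0.0         # no rate estimate on the first sample
        else:
            derivative = wrap_angle(error - self.prev_error) / dt
        self.prev_error = error
        out = self.kp * error + self.ki * self.integral + self.kd * derivative
        # Clamp to what the driver can accept.
        return max(-self.out_limit, min(self.out_limit, out))
```

In this sketch the target would be the phone's heading received over Wi-Fi and the measured heading would come from the magnetometer and IMU. The sign convention between the controller output and the wheel direction depends on the wiring and would need to be checked on the hardware.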

Motion Sonification

What is this?

A sonification project that synthesises spatial audio in real time from motion in a visual feed. With the camera mounted on the head, blind users can perceive visual information about their surroundings through sound. It acts as a Sensory Substitution Device (SSD) for seeing through sound.

Status and future work

The following aspects are currently being worked on with feedback from a blind user.

Inspiration

As a cyclist, I constantly look back to check for vehicles behind and around me. This started as a device to assist me when cycling. It then turned out to be pretty effective for motion perception in everyday environments. Later I began developing it with a focus on blind users, who could use it to perceive motion around them.

* Spatial audio: headphones/earphones recommended

Sensor Sonification Module

What is this?

This is a module that gives analog audio feedback of quantities like resistance, voltage and capacitance between its two terminals. It can also measure their absolute values when the signal is fed to a smartphone or a PC via the headphone jack. Once the device can measure these values, the signal can be transformed into audio, visual and haptic feedback that can be used for interaction. Some of the sensors we used were light dependent resistors, an analog accelerometer, an ultrasonic sensor, an IR sensor, a piezo sensor and an audio mic. We gave the output to a speaker, a piezo patch and vibration motors. The basic DIY module is inexpensive (less than $2), so any development on it can reach a wider audience.

Featured work: Audio Feedback of Ultrasonic Sensor

The output of the HC-SR04 ultrasonic sensor was given to the smartphone via the headphone jack. It was heard as an audible frequency that increases with the proximity of an obstacle. The highlight is the sensing of extrasensory information (distance) through an existing sensory channel (hearing). Over prolonged use, this could redefine the type and number of human senses (based on the neuroplasticity of the human mind). This can be of great assistance to the visually impaired, and a similar approach could be used for various types of information that we as humans wish to get a better intuition of.

Publication: Keerthi Vasan G.C, Suresh B, Venkatesan.M (2017) "Agile and cost-effective ultrasonic module for people with visual impairment using a headphone jack: Implications for enhancing mobility aids" In: British Journal of Visual Impairment 35 (3), pp. 275-282 (Link)

Video Demonstrations: YouTube playlist

Wiki: 3.5mmSensor2phone on GitHub

Inspiration

This started off with a need to measure analog sensors using a smartphone and to use them for interaction. We realized we could hack the headphone jack to facilitate this. Our subsequent work was inspired by Drawdio and Makey Makey (originally made by Jay Silver of the Lifelong Kindergarten group, MIT Media Lab), where your touch would mean something in the digital world. Later our focus moved to Artificial Sense, where we augmented certain information into a human sense.

Team members: B Suresh, GC Keerthi Vasan (Physics PhD student at UC Davis)
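The distance-to-pitch idea from the featured work can be illustrated in software. The sketch below is not the published module, which feeds the sensor signal to the headphone jack directly. It only shows one possible mapping, assuming a linear relation between distance and tone frequency. The function names, the 200 to 2000 Hz tone range and the 2 to 400 cm limits (the commonly quoted HC-SR04 range) are assumptions.

```python
SPEED_OF_SOUND_CM_PER_S = 34300.0  # approximate value in air at about 20 degrees C


def echo_to_distance_cm(echo_seconds):
    """Convert a round-trip echo time to a one-way distance in centimetres."""
    return echo_seconds * SPEED_OF_SOUND_CM_PER_S / 2.0


def distance_to_tone_hz(distance_cm, d_min=2.0, d_max=400.0,
                        f_min=200.0, f_max=2000.0):
    """Map distance to a tone frequency: the closer the obstacle, the higher the pitch.

    The linear mapping and all default limits are illustrative assumptions.
    """
    # Clamp to the assumed sensing range so the tone stays within [f_min, f_max].
    d = max(d_min, min(d_max, distance_cm))
    closeness = (d_max - d) / (d_max - d_min)  # 0.0 when far, 1.0 when near
    return f_min + closeness * (f_max - f_min)
```

A logarithmic mapping may match pitch perception better than a linear one, but that is a design choice to evaluate with users.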

Point-to-control Responsive Lighting

What is this?

An interaction for controlling IoT devices at home by pointing at them. We converted the lights and plugs of a regular home into IoT access points that can be controlled with a pointer we developed. The demo is a proof of concept (PoC) of the interaction. This pointer could also be attached to the

Working

Each access point is a Raspberry Pi Zero powered from the AC line. The pointer works on infrared communication driven by an on-board microcontroller. The laser gives visual feedback for pointing, and the access points give auditory cues as feedback on the interaction. A single pointer can control all the devices in a home.

Inspiration

Pointing with a finger to spatially emphasise an object is a very natural human action. We wanted to extend this natural action to control various devices in the user's surroundings.

Smart Parking System (2016)

- Finalist at GE Edison Innovation Challenge 2016, Runners-up in Fidelity hackathon 2015 ~ Team of 5 - ‘Team Chrysalis’: Role - Hardware Prototyping, Preparation of business plan, Final Presentation

This is a conceptual prototype of an all-round parking solution for large commercial parking lots. It finds a parking space for a car and navigates the driver to that spot. The solution also includes complete management software for parking lots, covering pre-booking, payment, etc. We built a prototype that demonstrated the technical feasibility of the solution in a scaled-down parking lot, using image processing and localization techniques. This conceptual prototype was presented at the finals of GE Edison Innovation Challenge 2016.

Smart City Project (2016)

- Part of ‘Theory and Practice of Sensors and Actuators’ course under Prof. KV Gangadharan, NITK ~ Team of 6: Role - Implementation of Dijkstra's path planning algorithm and MQTT communication on Raspberry Pi

A scaled-down model of a future autonomous smart city made up of Automatic Guided Vehicles (AGVs) and robots, each undertaking a specific task such as taxi service, waste handling, etc. The central server, a Raspberry Pi, coordinates the activities of the units without conflicts and communicates with the AGVs using the MQTT (Message Queuing Telemetry Transport) protocol. My team (team of 6) was responsible for the central server and for path planning of the AGVs, which is done in real time in order to avoid collisions. My role was to implement and test the path planning algorithm, namely Dijkstra's algorithm, so that each AGV takes the shortest path with the least traffic.
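The path planning step can be illustrated with a compact version of Dijkstra's algorithm. This is a sketch rather than the code that ran on the Raspberry Pi. The graph layout, the node names and the idea of folding traffic into the edge cost are assumptions about how "shortest path with least traffic" might be modelled.

```python
import heapq


def shortest_path(graph, start, goal):
    """Dijkstra's algorithm on a graph given as {node: {neighbour: cost}}.

    Costs must be non-negative. Returns (total_cost, path), or (inf, [])
    when the goal cannot be reached.
    """
    dist = {start: 0.0}
    prev = {}
    heap = [(0.0, start)]
    visited = set()
    while heap:
        d, node = heapq.heappop(heap)
        if node in visited:
            continue              # stale heap entry
        visited.add(node)
        if node == goal:
            break
        for nbr, cost in graph.get(node, {}).items():
            nd = d + cost
            if nd < dist.get(nbr, float("inf")):
                dist[nbr] = nd
                prev[nbr] = node
                heapq.heappush(heap, (nd, nbr))
    if goal not in dist:
        return float("inf"), []
    # Walk the predecessor links back from the goal.
    path = [goal]
    while path[-1] != start:
        path.append(prev[path[-1]])
    path.reverse()
    return dist[goal], path


# Hypothetical road grid: each edge cost is road length plus a traffic penalty.
roads = {"A": {"B": 1.0, "C": 2.0}, "B": {"D": 1.0}, "C": {"D": 1.0}, "D": {}}
print(shortest_path(roads, "A", "D"))   # free roads: route through B
roads["B"]["D"] = 4.0                   # congestion raises the cost of B -> D
print(shortest_path(roads, "A", "D"))   # route now goes through C
```

In a live system the server would update the edge costs as AGVs report their positions and then replan, so that a congested segment is avoided by later vehicles.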

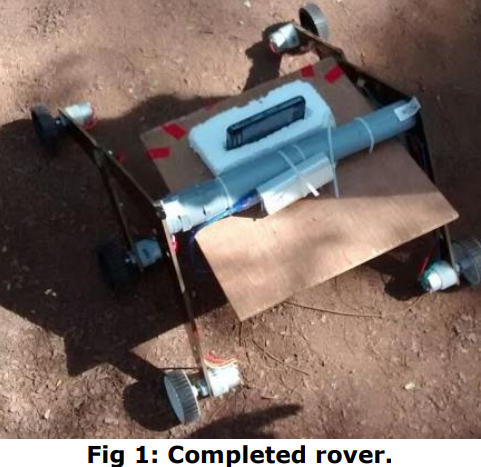

Infinity - The Rover (2015)

- Presented at IES 2016, Kumamoto University, Japan; Exhibited in AstroCommittee, Engineer Tech-fest 2015 ~ Team of 9: Role - Fabrication, electronics, programming, app development, sensor and camera feed to PC

Rovers like Curiosity use a rocker-bogie mechanism to achieve all-terrain locomotion. It keeps all the wheels in contact with the ground at all times, which makes the rover capable of climbing stairs or moving on uneven land. We (team of 9) made a functional robot based on the rocker-bogie mechanism, with all six wheels controlled independently. It could be controlled wirelessly over Bluetooth from an Android app (developed using MIT App Inventor). A smartphone placed on the bot provides a camera and a range of sensors. The camera feed was transmitted over Wi-Fi to a PC, and the sensor input was transmitted on the same channel. The input from the accelerometer and gyroscope was used to track the bot in real time in 3D (this aspect requires more development). Our work was presented at the 5th International Engineering Symposium - IES 2016. (PDF)

Rover with rocker-bogie linkage mounted with an ultrasonic sensor and Bluetooth module

Two wheel Balance Bot (2015)

- Under the guidance of Prof. Kavi Arya, Professor of Computer Science and Engineering, IIT Bombay

A balance bot, being an inverted pendulum, is an inherently unstable system. It was stabilised using a PID controller. A 9-axis IMU placed on the bot collects accelerometer and gyroscope data. The orientation of the bot is obtained by applying a complementary filter to this data. The orientation is fed as input to a PID controller implemented in embedded C on an ATmega2560 microcontroller. The output of the PID is mapped to the motor speed. Using XBee wireless (RF based), one can get a real-time plot of the orientation and the PID output in Scilab. The bot can also be moved forward and back on command from a PC. More about the project can be found at: github.com/eyantrainternship/eYSIP2015_Two Wheel Balance Bot /
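The complementary filter mentioned above can be sketched in a few lines. The project's implementation was written in embedded C for the ATmega2560. The Python version below only illustrates the technique, and the function names, the axis convention and the blend factor `alpha = 0.98` are assumptions.

```python
import math


def accel_tilt_deg(ax, az):
    """Tilt angle in degrees from the gravity components on two accelerometer axes.

    The axis convention (x forward, z up) is an assumption for this sketch.
    """
    return math.degrees(math.atan2(ax, az))


def complementary_filter(angle_deg, gyro_rate_dps, accel_angle_deg, dt, alpha=0.98):
    """Blend the integrated gyro rate with the accelerometer angle.

    The gyro term tracks fast changes but drifts over time. The accelerometer
    term is noisy but drift-free, so it slowly pulls the estimate back.
    """
    gyro_angle = angle_deg + gyro_rate_dps * dt
    return alpha * gyro_angle + (1.0 - alpha) * accel_angle_deg


# Example: the estimate converges to a steady 10 degree accelerometer reading.
angle = 0.0
for _ in range(1000):
    angle = complementary_filter(angle, 0.0, 10.0, dt=0.01)
```

The filtered angle is what a PID controller would take as its measured input. A larger `alpha` trusts the gyroscope more and responds faster, at the cost of correcting drift more slowly.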

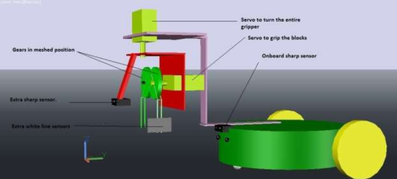

Cargo Alignment Bot (2014)

- Secured first position in eYRC 2014, IIT Bombay among 361 participating teams ~ Team of 4 - ‘Team Chrysalis’: Role - Mechanical design and fabrication

We (team of 4) made a robot capable of line-following on a grid and of cube detection. My role was to fabricate a mechanism to align the cubes based on a preset convention. The bot is a Firebird 5 robot using an ATmega2560. All files can be found at: https://github.com/kevinbdsouza/Cargo-alignment-robot